How Cloud TPU v5e accelerates large-scale AI inference

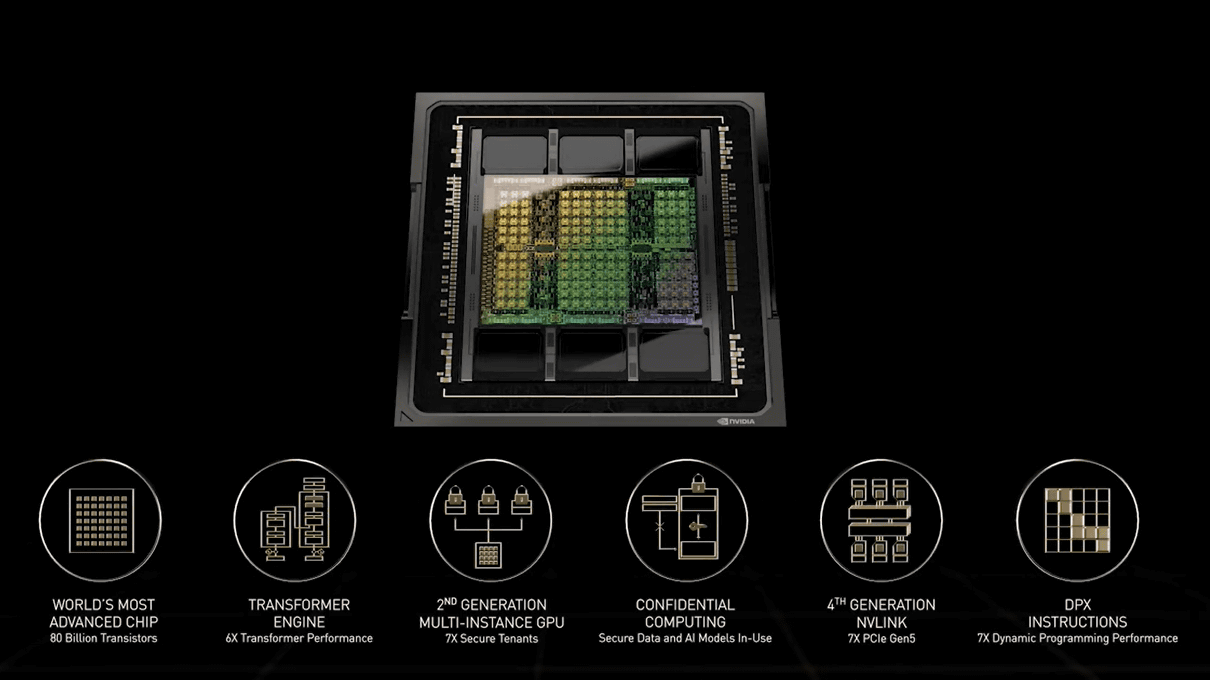

NVIDIA announces next-generation GPU architecture 'Hopper', AI processing speed is 6 times faster than Ampere and various performances are dramatically improved - GIGAZINE

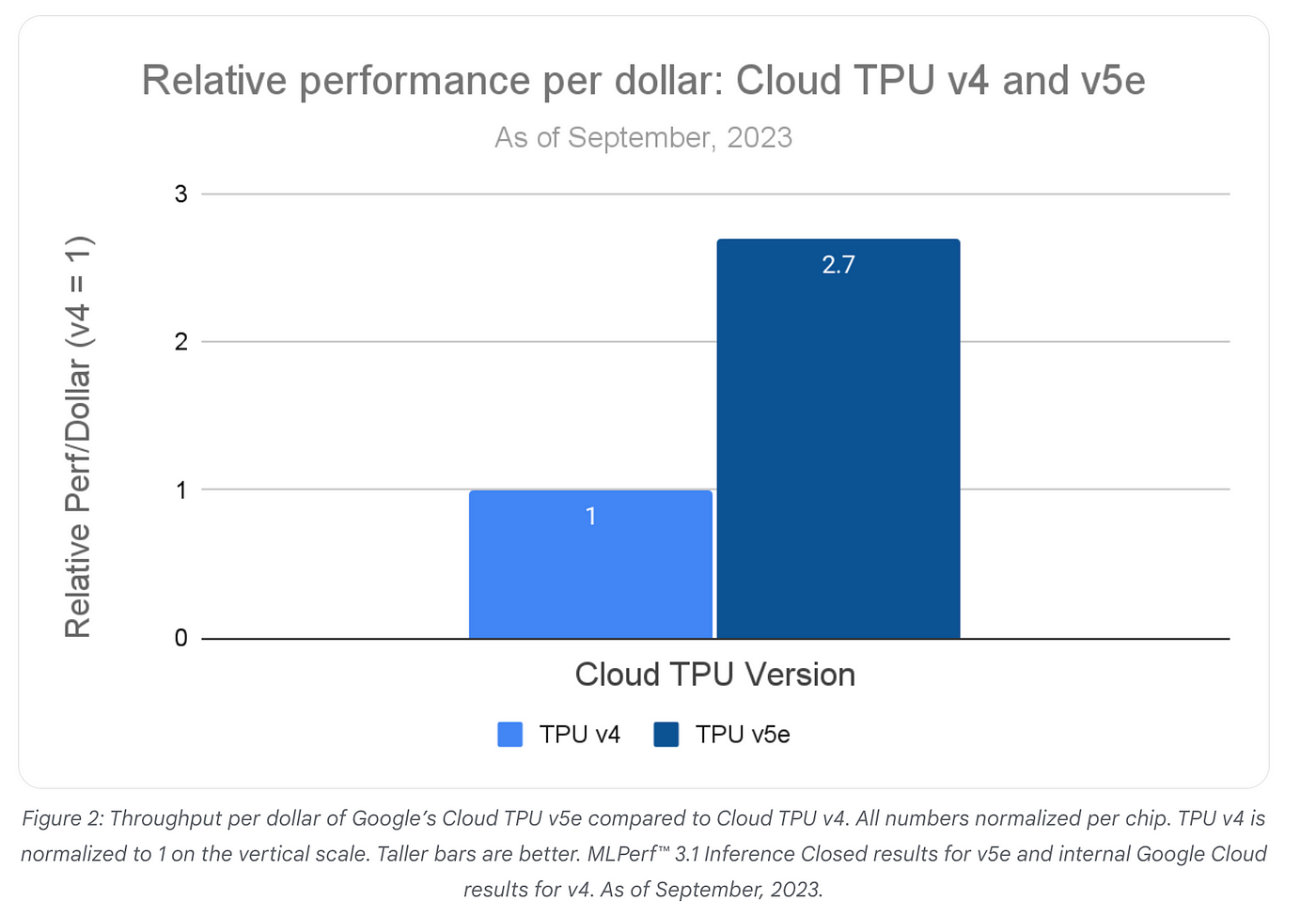

Cost-efficient AI inference with Cloud TPU v5e on GKE

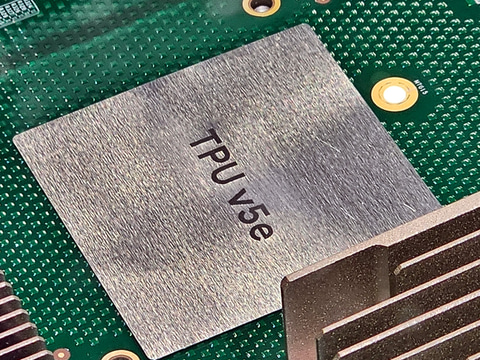

Google's next-generation AI chip released, Jeff Dean: It's even harder to improve AI hardware performance

Can Nvidia Maintain Its Position in the AI Chip Arms Race? - moomoo Community

Google announces the 5th generation model 'TPU v5e' of the AI specialized processor TPU, up to 2 times the training performance per dollar and up to 2.5 times the inference performance compared

🧨 Accelerating Stable Diffusion XL Inference with JAX on Cloud TPU v5e

Democratizing AI: How GKE Makes Machine Learning Accessible, by Abdellfetah SGHIOUAR, Google Cloud - Community, Dec, 2023

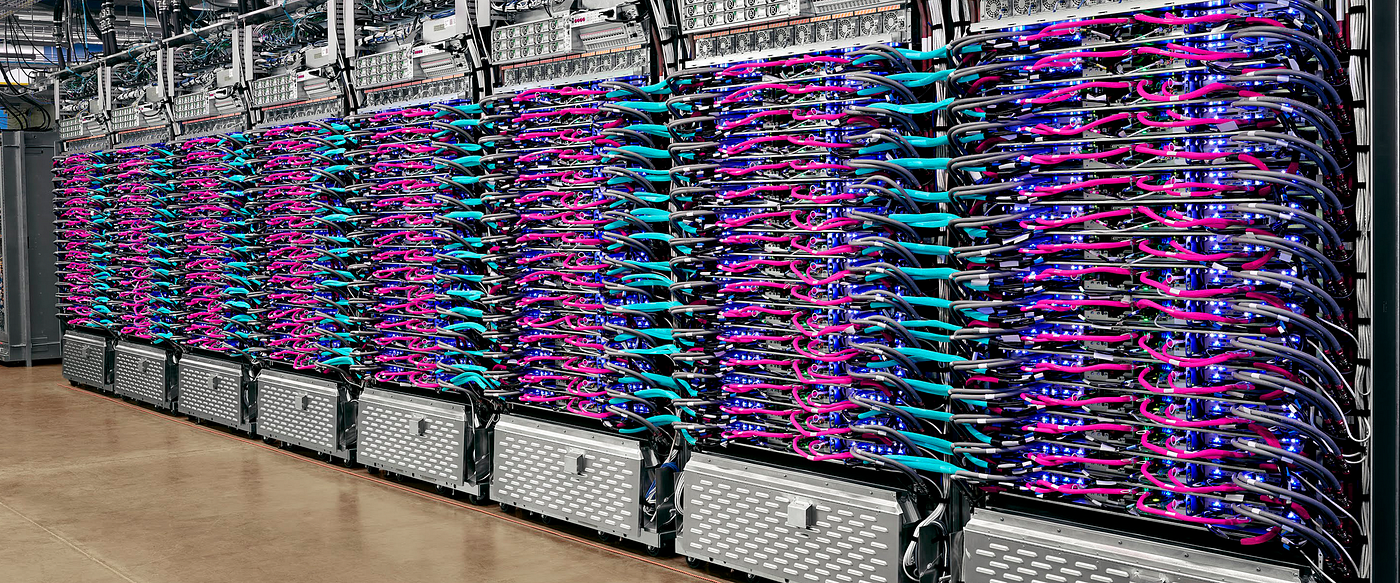

Google Cloud Platform Technology Nuggets — Sep 1–15, 2023 Edition, by Romin Irani, Google Cloud - Community

Introducing Cloud TPU v5p and AI Hypercomputer

Taking AI #INFRA - 1 - Securitiex on Substack