Samsung's New HBM2 Memory Has 1.2 TFLOPS of Embedded Processing

Page 252 – Blocks and Files

Hardware for Deep Learning. Part 4: ASIC, by Grigory Sapunov

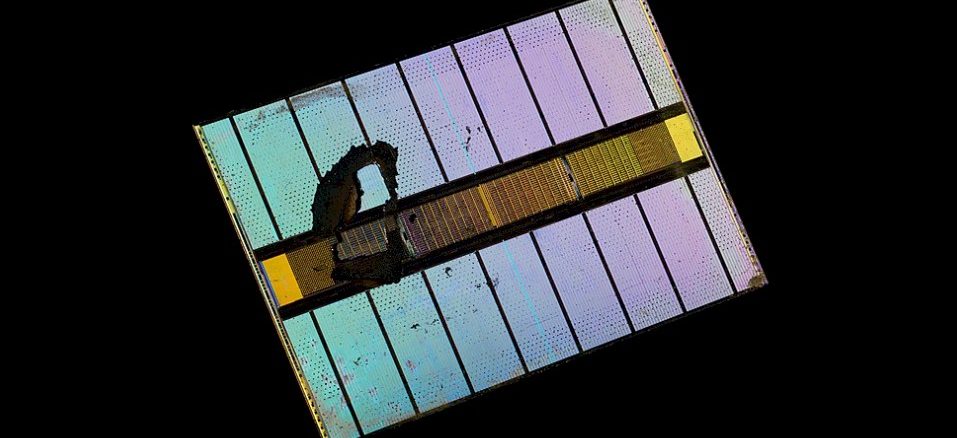

Samsung Begins Mass Producing World's Fastest DRAM – Based on Newest High Bandwidth Memory (HBM) Interface – Samsung Global Newsroom

AMD & others --- Intel dominance in 2022

Processing-in-Memory

AMD, TSMC & Imec Show Their Chiplet Playbooks at ISSCC - interesting piece on AMD's chiplet technology and more : r/AMD_Stock

Samsung ramps up 2.5D packaging capacity, eyeing Nvidia AI GPU orders

The Third- to Fifth-Era GPUs

What Faster And Smarter HBM Memory Means For Systems